Using OpenAI models

Added in eventcatalog@2.35.7. EventCatalog Chat can be configured to use any of the OpenAI models listed in the Models section.

This lets you talk to your architecture using the power of OpenAI models.

To use OpenAI models, you need to bring your own OpenAI API key to EventCatalog.

Installation

1. Set up your license keys

First, you need to get a license key for EventCatalog.

EventCatalog Chat is a paid feature. You can get a 14-day free trial of the EventCatalog Starter Plan on EventCatalog Cloud.

Once you have a license key, add it to your .env file together with your OpenAI API key:

```bash
EVENTCATALOG_SCALE_LICENSE_KEY=<your-license-key>
OPENAI_API_KEY=<your-openai-api-key>
```
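If you want to catch a missing key before starting the catalog, a small script can check the environment first. This is a minimal sketch and not part of EventCatalog: the function name `missingChatKeys` is hypothetical, and it only checks the two variables named above.

```javascript
// Hypothetical pre-flight check, not part of EventCatalog.
// Returns the names of required keys that are unset, empty, or whitespace-only.
const REQUIRED_CHAT_KEYS = ["EVENTCATALOG_SCALE_LICENSE_KEY", "OPENAI_API_KEY"];

function missingChatKeys(env) {
  return REQUIRED_CHAT_KEYS.filter(
    (key) => typeof env[key] !== "string" || env[key].trim() === ""
  );
}

// Example with an in-memory object; in practice you would pass process.env.
const example = { EVENTCATALOG_SCALE_LICENSE_KEY: "abc", OPENAI_API_KEY: "" };
console.log(missingChatKeys(example)); // [ 'OPENAI_API_KEY' ]
```

To check your real environment, call `missingChatKeys(process.env)` after loading the file, for example with `node --env-file=.env` (available in Node.js 20.6 and later).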

2. Configure the OpenAI model

Next, you need to configure the OpenAI model. Add the following to your eventcatalog.config.js file.

EventCatalog defaults to the o4-mini model. You can select from a range of models; see the Models section for more information.

```javascript
chat: {
  // Enable the chat or not (default true, for new catalogs)
  enabled: true,
  // Tells EventCatalog that we want to use OpenAI
  provider: 'openai',
  // OpenAI model to use (see the list of models in the Models section)
  model: 'o4-mini',
},
```

You can also set temperature, topP, topK, frequencyPenalty, and presencePenalty for the OpenAI model. These have sensible defaults, but you can override them if you want to.

How to override the defaults for the OpenAI model

You can fine-tune the OpenAI model to your needs by setting the temperature, topP, topK, frequencyPenalty, and presencePenalty.

```javascript
chat: {
  // Enable the chat or not (default true, for new catalogs)
  enabled: true,
  // Tells EventCatalog that we want to use OpenAI
  provider: 'openai',
  // OpenAI model to use (see the list of models in the Models section)
  model: 'o4-mini',
  // Defaults to 0.2
  temperature: 0.5,
  // Not set by default
  topP: 1,
  // Not set by default
  topK: 1,
  // Defaults to 0
  frequencyPenalty: 0,
  // Defaults to 0
  presencePenalty: 0,
},
```
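To make the defaults concrete, the sketch below merges a `chat` block with the default values listed in the comments above (model o4-mini, temperature 0.2, frequencyPenalty and presencePenalty 0, no topP or topK). It is a hypothetical illustration of how overrides combine, not EventCatalog's internal implementation, and `resolveChatSettings` is an invented name.

```javascript
// Hypothetical helper, NOT EventCatalog's internal code: it only illustrates
// how the documented defaults combine with the values you set in `chat`.
const DOCUMENTED_DEFAULTS = {
  model: "o4-mini",
  temperature: 0.2,
  frequencyPenalty: 0,
  presencePenalty: 0,
  // topP and topK have no default, so they stay absent unless you set them.
};

const TUNABLE_KEYS = ["model", "temperature", "topP", "topK", "frequencyPenalty", "presencePenalty"];

function resolveChatSettings(chat = {}) {
  const resolved = { ...DOCUMENTED_DEFAULTS };
  for (const key of TUNABLE_KEYS) {
    // Only values you actually provide override the defaults.
    if (chat[key] !== undefined) resolved[key] = chat[key];
  }
  return resolved;
}

// Overriding temperature and topP keeps the other defaults in place.
console.log(resolveChatSettings({ provider: "openai", temperature: 0.5, topP: 1 }));
```

The design choice here is that omitting a key means "use the default", which matches the advice that you only need to set the values you want to change.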

3. Configure EventCatalog to run on a server

You need to configure EventCatalog to run on a server by setting output to 'server' in your eventcatalog.config.js file. See the EventCatalog documentation for more information and the hosting options.

```javascript
// rest of the config...
// Default output is 'static', but you can change it to 'server'
output: 'server',
```

Running EventCatalog on a server keeps your API keys safe. The chat sends requests to EventCatalog's server-side code, which calls the OpenAI model and returns the response, so your keys are never exposed to client-side code. You can use our Dockerfile to run EventCatalog on a server; see the EventCatalog documentation for more information.
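The pattern that keeps the key private can be sketched as a server-side function that receives only the user's question and attaches the key itself. This is a hypothetical illustration, not EventCatalog's own server code: `buildOpenAIRequest` is an invented name, and it targets OpenAI's public Chat Completions endpoint with just `model` and `messages`.

```javascript
// Hypothetical sketch of the server-side pattern; not EventCatalog's own code.
// The browser sends only the question; the server adds the secret key.
const OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions";

function buildOpenAIRequest(question, { apiKey, model = "o4-mini" }) {
  if (!apiKey) throw new Error("OPENAI_API_KEY is not set on the server");
  return {
    url: OPENAI_CHAT_URL,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        // The key is attached here, on the server, and never sent to the browser.
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({
        model,
        messages: [{ role: "user", content: question }],
      }),
    },
  };
}

// On the server you would then send it, for example:
//   const { url, init } = buildOpenAIRequest(question, { apiKey: process.env.OPENAI_API_KEY });
//   const response = await fetch(url, init);
```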

Run EventCatalog

Once you have installed and configured the plugin, and enabled the chat in the eventcatalog.config.js file, you can run the catalog.

```bash
npm run generate
npm run dev
```

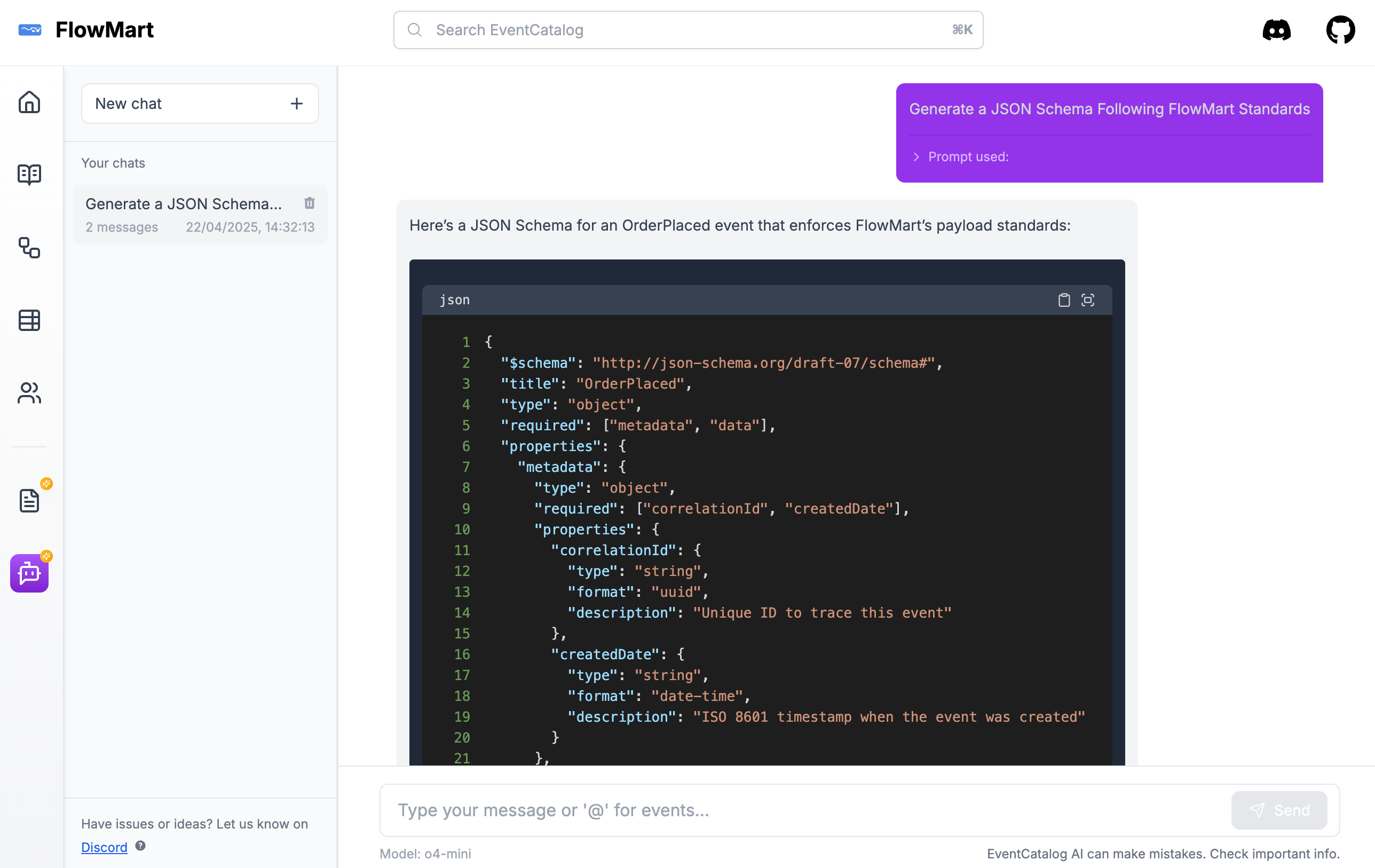

Navigate to http://localhost:3000/chat and you should see the AI assistant.

You can bring your own prompts to EventCatalog Chat. This lets you tailor the chat experience to your organization and teams. See the bring your own prompts section for more information.

Configuration

You can configure the model, temperature, topP, topK, frequencyPenalty, and presencePenalty in the eventcatalog.config.js file.

```javascript
chat: {
  model: 'o4-mini',
  temperature: 0.5,
  topP: 1,
  topK: 1,
  frequencyPenalty: 0,
  presencePenalty: 0,
},
```
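Putting the pieces together, a minimal eventcatalog.config.js for this guide combines the chat block with output: 'server'. This sketch assumes your config file exports a default object; keep whatever other settings your catalog already has where the placeholder comment is.

```javascript
// eventcatalog.config.js (sketch): only the settings from this guide are shown.
export default {
  // rest of the config...

  // Run on a server so OPENAI_API_KEY stays server-side (default is 'static').
  output: 'server',

  chat: {
    enabled: true,
    provider: 'openai',
    model: 'o4-mini',
    // Optional overrides; omit any of these to keep the defaults.
    temperature: 0.5,
    frequencyPenalty: 0,
    presencePenalty: 0,
  },
};
```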

Models

Here is a list of the models you can use with EventCatalog Chat:

- o1, o1-2024-12-17
- o1-mini, o1-mini-2024-09-12
- o1-preview, o1-preview-2024-09-12
- o3-mini, o3-mini-2025-01-31
- o3, o3-2025-04-16
- o4-mini, o4-mini-2025-04-16
- gpt-4.1, gpt-4.1-2025-04-14
- gpt-4.1-mini, gpt-4.1-mini-2025-04-14
- gpt-4.1-nano, gpt-4.1-nano-2025-04-14
- gpt-4o, gpt-4o-2024-05-13, gpt-4o-2024-08-06, gpt-4o-2024-11-20
- gpt-4o-audio-preview, gpt-4o-audio-preview-2024-10-01, gpt-4o-audio-preview-2024-12-17
- gpt-4o-search-preview, gpt-4o-search-preview-2025-03-11
- gpt-4o-mini-search-preview, gpt-4o-mini-search-preview-2025-03-11
- gpt-4o-mini, gpt-4o-mini-2024-07-18
- gpt-4-turbo, gpt-4-turbo-2024-04-09, gpt-4-turbo-preview, gpt-4-0125-preview, gpt-4-1106-preview
- gpt-4, gpt-4-0613
- gpt-4.5-preview, gpt-4.5-preview-2025-02-27
- gpt-3.5-turbo, gpt-3.5-turbo-0125, gpt-3.5-turbo-1106
- chatgpt-4o-latest

You can find more information about the models in the OpenAI documentation.
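A typo in the model name is easy to miss, so you may want a quick check before building. The sketch below is a hypothetical helper, not part of EventCatalog: `checkChatModel` is an invented name, the set simply mirrors the list above, and a missing `model` falls back to the documented o4-mini default.

```javascript
// Hypothetical pre-build check, not part of EventCatalog: reports whether the
// configured model is one of the names in the Models list above.
const SUPPORTED_OPENAI_MODELS = new Set([
  "o1", "o1-2024-12-17", "o1-mini", "o1-mini-2024-09-12",
  "o1-preview", "o1-preview-2024-09-12", "o3-mini", "o3-mini-2025-01-31",
  "o3", "o3-2025-04-16", "o4-mini", "o4-mini-2025-04-16",
  "gpt-4.1", "gpt-4.1-2025-04-14", "gpt-4.1-mini", "gpt-4.1-mini-2025-04-14",
  "gpt-4.1-nano", "gpt-4.1-nano-2025-04-14",
  "gpt-4o", "gpt-4o-2024-05-13", "gpt-4o-2024-08-06", "gpt-4o-2024-11-20",
  "gpt-4o-audio-preview", "gpt-4o-audio-preview-2024-10-01", "gpt-4o-audio-preview-2024-12-17",
  "gpt-4o-search-preview", "gpt-4o-search-preview-2025-03-11",
  "gpt-4o-mini-search-preview", "gpt-4o-mini-search-preview-2025-03-11",
  "gpt-4o-mini", "gpt-4o-mini-2024-07-18",
  "gpt-4-turbo", "gpt-4-turbo-2024-04-09", "gpt-4-turbo-preview",
  "gpt-4-0125-preview", "gpt-4-1106-preview", "gpt-4", "gpt-4-0613",
  "gpt-4.5-preview", "gpt-4.5-preview-2025-02-27",
  "gpt-3.5-turbo-0125", "gpt-3.5-turbo", "gpt-3.5-turbo-1106", "chatgpt-4o-latest",
]);

function checkChatModel(chat) {
  const model = chat.model ?? "o4-mini"; // documented default
  return SUPPORTED_OPENAI_MODELS.has(model)
    ? { ok: true, model }
    : { ok: false, model, message: `"${model}" is not in the EventCatalog Chat model list` };
}

console.log(checkChatModel({ provider: "openai", model: "gpt-4.1-mini" }).ok); // true
console.log(checkChatModel({ provider: "openai", model: "gpt-4.1-mini-typo" }).ok); // false
```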

Got a question? Or want to contribute?

Found a good model for your catalog? Let us know on Discord. If you need help configuring your model, you can also ask us there.

Have a question?

If you have any questions, please join us on Discord.