EventCatalog now integrates with OpenAI

We’re excited to announce that EventCatalog now supports seamless integration with OpenAI.

This new feature allows you to harness the power of OpenAI models and embeddings directly within your catalog.

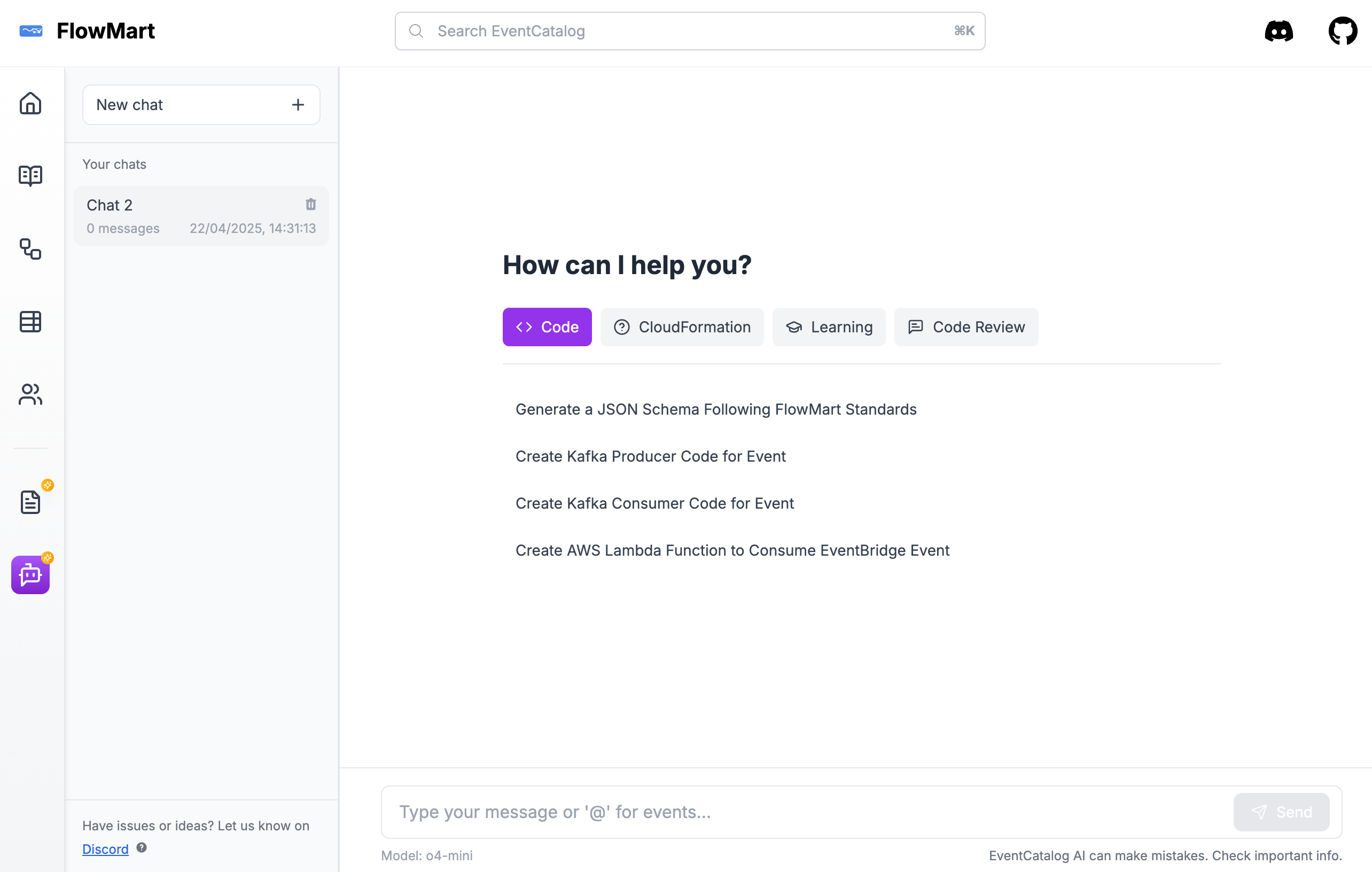

With this feature, you can bring your own OpenAI keys to EventCatalog and get architecture insights in seconds using EventCatalog Chat.

How does it work?

EventCatalog Chat transforms how your team interacts with your event-driven architecture. Instead of digging through documentation or tracking down who produces what, you can simply ask. Want to know what events exist, how schemas are structured, or who’s publishing and consuming them? Just chat with your catalog and get instant answers—saving you time and effort.
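This post doesn't describe how EventCatalog Chat finds the catalog pages that are relevant to a question. Because it mentions OpenAI embeddings, one common pattern is retrieval. You embed each catalog page once, then embed each question and send the most similar pages to the model as context. The sketch below shows that generic pattern with hypothetical names (`CatalogChunk`, `topK`, `buildPrompt`) and toy two-dimensional vectors. It is an assumption about the approach, not EventCatalog's actual implementation.

```typescript
// Hypothetical shape of an embedded catalog page (not EventCatalog's internal type).
interface CatalogChunk {
  id: string;          // e.g. the event or service the text describes
  text: string;        // documentation text that was embedded
  embedding: number[]; // vector returned by the embedding model
}

// Cosine similarity: 1 = same direction, 0 = orthogonal, -1 = opposite.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("Vectors must have the same length");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Rank chunks by similarity to the question embedding and keep the best k.
function topK(question: number[], chunks: CatalogChunk[], k: number): CatalogChunk[] {
  return chunks
    .map((chunk) => ({ chunk, score: cosineSimilarity(question, chunk.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((ranked) => ranked.chunk);
}

// Put the retrieved catalog text in front of the user's question.
function buildPrompt(question: string, context: CatalogChunk[]): string {
  const contextText = context.map((c) => `[${c.id}]\n${c.text}`).join("\n\n");
  return `Answer using only the catalog context below.\n\n${contextText}\n\nQuestion: ${question}`;
}

// Toy 2-dimensional embeddings; real embedding vectors are much longer.
const catalog: CatalogChunk[] = [
  { id: "OrderPlaced", text: "Produced by the Orders service.", embedding: [1, 0] },
  { id: "PaymentFailed", text: "Consumed by the Notifications service.", embedding: [0, 1] },
];
console.log(buildPrompt("Who produces OrderPlaced?", topK([0.9, 0.1], catalog, 1)));
```

Only the top-ranked pages are sent, which keeps the prompt small while grounding the answer in your catalog rather than in the model's general knowledge.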

EventCatalog Chat can also generate code on the fly and supports custom prompts, allowing you to define reusable queries aligned with your organization's best practices and governance standards. Whether it's architecture insights or scaffolding code, your team can move faster with AI-powered support built right into your catalog.

Previously, EventCatalog Chat relied solely on browser-based models: open-source models that ran entirely within the browser. While this offered a lightweight and privacy-conscious option, we heard your feedback loud and clear. You can now bring your own OpenAI key to EventCatalog, unlocking more powerful capabilities for exploring and understanding your architecture.

To get started, read the EventCatalog Chat Guide or watch the video below.

Bring your own prompts

We're introducing a powerful new concept in EventCatalog Chat: Bring Your Own Prompts.

This feature allows teams and organizations to define custom prompts tailored to their own standards, best practices, and workflows. With predefined prompts, you can guide how EventCatalog Chat responds—ensuring consistency, compliance, and faster results across your team.

Here are a few examples of what you can do:

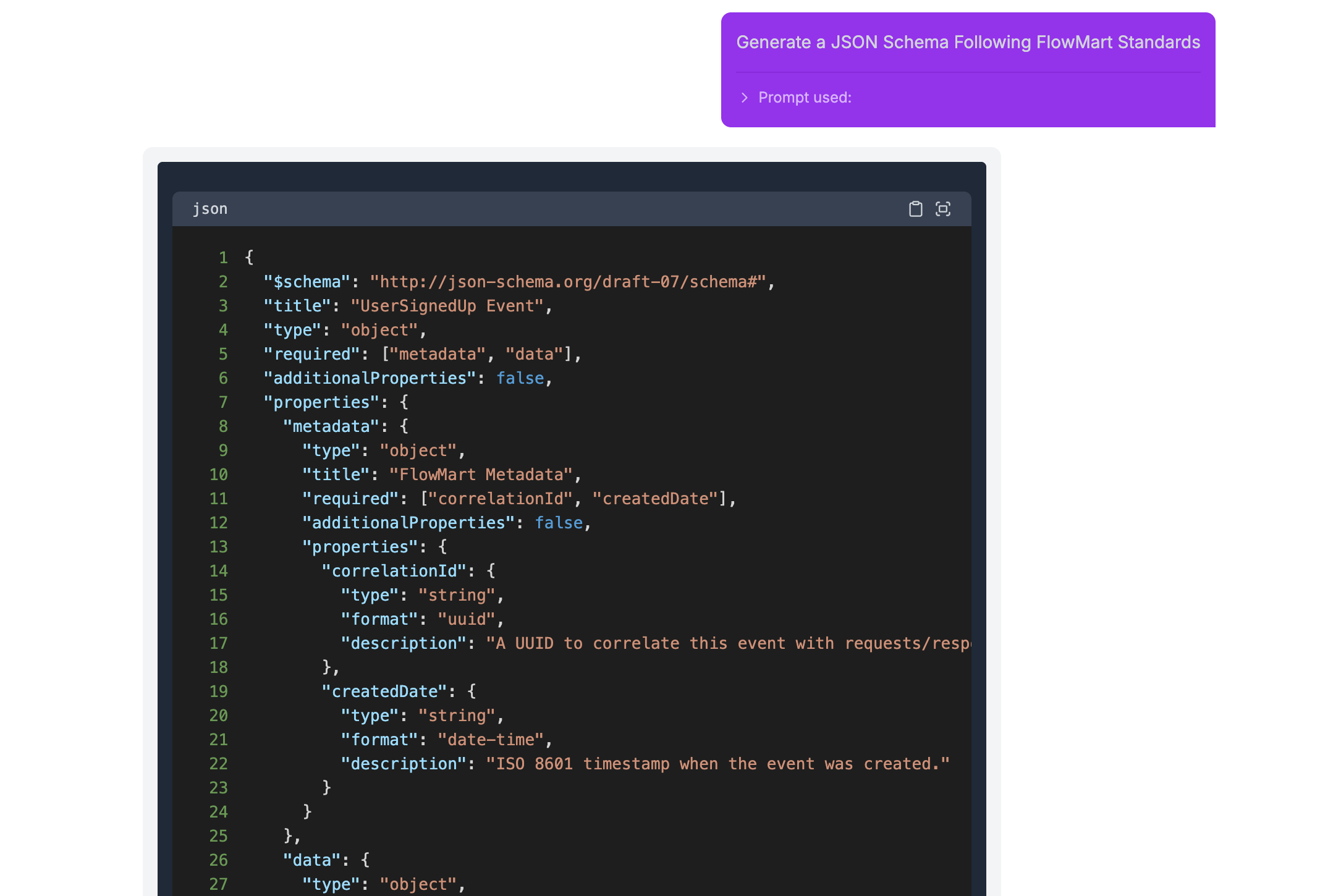

- "Generate a JSON schema following FlowMart standards"

- "Create Kafka producer code for an event"

- "Create Kafka consumer code for an event"

- "Create an AWS Lambda function to consume an EventBridge event"

With BYO Prompts, working with your event-driven architecture just got a whole lot smarter and more tailored to you.

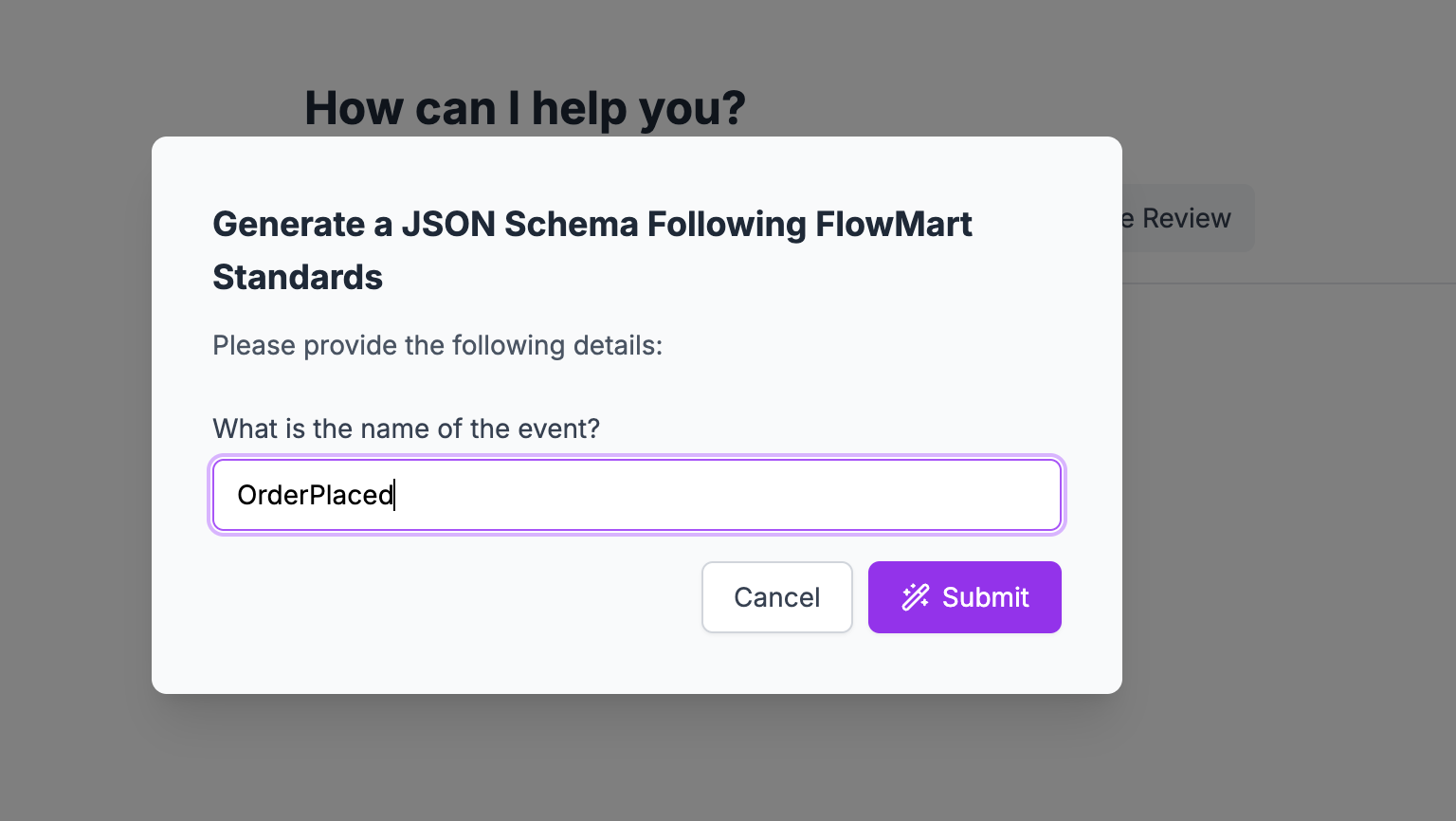

You can also make your prompts dynamic, allowing EventCatalog Chat to ask users for input before sending the prompt to your OpenAI model.

For example, if you want to generate a JSON schema that follows your company’s best practices, you can first prompt the user to enter the event name they’re working with. This adds flexibility and interactivity to your custom prompts—making them more useful across different teams and scenarios.

When the user submits the form, their input is inserted into the prompt and the model generates a JSON schema that follows the standards and conventions your company defines. This helps keep schemas consistent while saving your team valuable time on repetitive tasks.
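To make the flow concrete, here is a minimal sketch of a dynamic prompt: a template with a placeholder, one form input, and a function that fills the placeholder with the user's answer before the prompt is sent. The `{{eventName}}` placeholder syntax and the names `CustomPrompt`, `PromptInput`, and `renderPrompt` are hypothetical, and the "standards" wording is a stand-in for your own conventions; EventCatalog's real prompt format may differ.

```typescript
// Hypothetical prompt definition; EventCatalog's real prompt format may differ.
interface PromptInput {
  id: string;    // placeholder name used in the template, e.g. {{eventName}}
  label: string; // question shown to the user in the form
}

interface CustomPrompt {
  title: string;
  inputs: PromptInput[];
  template: string;
}

// Fill each {{id}} placeholder with the user's answer, rejecting empty answers.
function renderPrompt(prompt: CustomPrompt, answers: Record<string, string>): string {
  for (const input of prompt.inputs) {
    const value: string | undefined = answers[input.id];
    if (value === undefined || value.trim() === "") {
      throw new Error(`Missing value for "${input.label}"`);
    }
  }
  // Placeholders without a matching answer are left untouched.
  return prompt.template.replace(/\{\{(\w+)\}\}/g, (match: string, id: string) => {
    const value: string | undefined = answers[id];
    return value !== undefined ? value : match;
  });
}

// Example prompt; the "standards" text is a placeholder for your own conventions.
const jsonSchemaPrompt: CustomPrompt = {
  title: "Generate a JSON schema following FlowMart standards",
  inputs: [{ id: "eventName", label: "What is the name of the event?" }],
  template:
    "Create a JSON schema for the {{eventName}} event. " +
    "Use camelCase property names and require eventId and timestamp fields.",
};

console.log(renderPrompt(jsonSchemaPrompt, { eventName: "OrderPlaced" }));
```

Validating inputs before rendering means the model never receives a prompt with an empty or unfilled placeholder.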

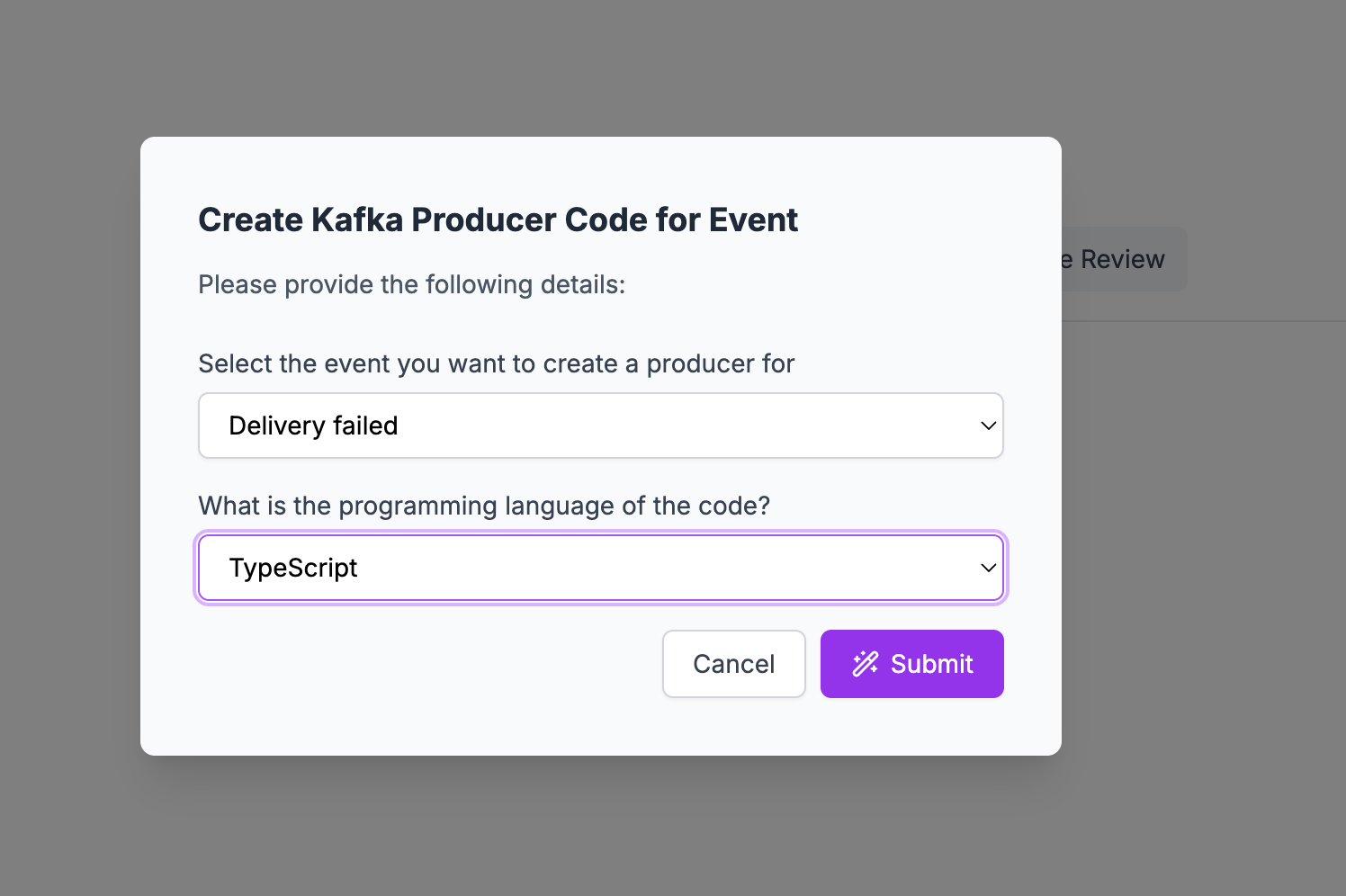

Custom prompts can also capture multiple inputs, making them even more powerful.

For instance, you can ask users to pick an event from a list generated from your catalog, and a programming language from a predefined list. This gives your team a flexible, guided way to generate highly specific outputs, such as language-specific producer or consumer code for a particular event.

The prompt is then sent to your OpenAI model, which generates code from the inputs provided. In the example above, it generates a TypeScript Kafka producer for the Delivery Failed event, tailored to your architecture and development standards.
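The multi-input case can be sketched the same way: one select input whose options are generated from the events in the catalog, one fixed language list, and a check that each answer is one of its allowed options before the template is filled. All names here (`CatalogEvent`, `SelectInput`, `buildInputs`, `renderSelectPrompt`) and the `{{id}}` placeholder syntax are hypothetical; EventCatalog's real prompt format may differ.

```typescript
// Hypothetical types; EventCatalog's real prompt schema may differ.
interface CatalogEvent {
  id: string;
  name: string;
}

interface SelectInput {
  id: string;        // placeholder name in the template
  label: string;     // question shown to the user
  options: string[]; // the only values the user may pick
}

// Predefined list of languages (illustrative).
const LANGUAGES = ["TypeScript", "Python", "Java", "Go"];

// One dropdown is generated from the catalog's events; the other is fixed.
function buildInputs(events: CatalogEvent[]): SelectInput[] {
  return [
    { id: "event", label: "Which event?", options: events.map((e) => e.name).sort() },
    { id: "language", label: "Which language?", options: LANGUAGES },
  ];
}

// Validate every answer against its options, then fill the {{id}} placeholders.
function renderSelectPrompt(
  template: string,
  inputs: SelectInput[],
  answers: Record<string, string>,
): string {
  for (const input of inputs) {
    const value: string | undefined = answers[input.id];
    if (value === undefined || !input.options.includes(value)) {
      throw new Error(`Invalid choice for "${input.label}": ${value}`);
    }
  }
  return template.replace(/\{\{(\w+)\}\}/g, (match: string, id: string) => {
    const value: string | undefined = answers[id];
    return value !== undefined ? value : match;
  });
}

const events: CatalogEvent[] = [
  { id: "order-placed", name: "Order Placed" },
  { id: "delivery-failed", name: "Delivery Failed" },
];
const template = "Write a {{language}} Kafka producer that publishes the {{event}} event.";
console.log(
  renderSelectPrompt(template, buildInputs(events), { event: "Delivery Failed", language: "TypeScript" }),
);
```

Generating the event options from the catalog means the list stays in sync as events are added, while the fixed language list limits output to the stacks your team supports.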

How to get started

Check out our EventCatalog Chat Guide to get started. If you have any questions or want to connect with the community, feel free to join us on Discord.

What’s next?

We’re just getting started. Next, we’re expanding model support in EventCatalog, including the ability to configure additional providers such as Anthropic, giving you more flexibility and choice in how you power your architecture insights.

We’re also building features that let you ask questions directly within your documentation pages, so teams can get instant, contextual answers while exploring or working on your event-driven systems—saving even more time and reducing friction.

Have questions or feedback? Join us on Discord—we’d love to hear from you.

Want a tailored walkthrough? Book a custom demo—we’re happy to help!

FAQ

Can I configure which OpenAI model is used?

Yes. Set the model property in your eventcatalog.config.js file to the OpenAI model you want to use.

You can also configure which embedding model is used.
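As a rough illustration, setting the model might look like the excerpt below. Only the `model` property comes from this post; the enclosing `chat` key and the `gpt-4o` value are assumptions, and the embedding model setting is left out because its property name isn't given here. Check the EventCatalog Chat Guide for the exact options.

```typescript
// eventcatalog.config.js (excerpt). A plain object literal, valid as JS or TS.
// Assumption: chat settings live under a `chat` key; only the `model`
// property itself is named in this post.
export default {
  chat: {
    model: "gpt-4o", // example OpenAI chat model name
  },
};
```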

Is it secure?

Yes. To use your own OpenAI keys, build EventCatalog as a server. The key is then used by your server rather than being shipped to visitors' browsers.

A new server output type is available in EventCatalog. It builds a server application that you can run locally or on a cloud provider.
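A minimal sketch of switching to the server output type follows, assuming it is selected through an `output` option set to `"server"`. Both the option name and the environment variable mentioned in the comment are assumptions to confirm in the EventCatalog hosting documentation.

```typescript
// eventcatalog.config.js (excerpt). A plain object literal, valid as JS or TS.
// Assumption: the server output type is selected with `output: "server"`.
export default {
  output: "server",
};
// Assumption: the OpenAI key is supplied to the running server as an
// environment variable (for example OPENAI_API_KEY), not committed in this file.
```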

You can read more about hosting a server in the EventCatalog documentation.